The GitMerge 2016 conference was held in New York over two days in April - the first day was the Git core contributors conference, and the second was open to the general public, taking place on the stage of the off-broadway production of Avenue Q.

Day One: Core Contributors conference

The contributors conference was attended by five people from GitHub (including Peff, acting as MC), three each from Google and GitLab, with individual representation from Atlassian, AutoDesk, Bloomberg, Booking.com, and Twitter (all contributors present were male, sadly). The day took an unconference format, with topics suggested on a whiteboard, and discussed with the most popular topics first.

Big Repos and their related performance problems were the first topic suggested, which immediately needed a bit of clarification, because there are at least four ways in which a repo can be ‘big’, and they all have their own problems:

-

Lots of refs (ie branches and tags) - There are at least two scaling problems as the number of refs in a repository grows - the first is that Git can’t read a single ref from a packed ref file without reading the entire packed refs file - for Twitter, this can mean a file of over 100,000 refs - and all of this happening every time you try to tab-complete a branch name! Progress is being made on solving this with the introduction of pluggable ref backends for Git, which will allow the use of a performant key-value store like LMDB. The second problem is that the Git protocol doesn’t yet have any way to say, when connecting to a server for a fetch or push, that the client does not want to hear a full list of every single ref the server has to offer…

-

Large numbers of files - doing a checkout of a branch, or even just calling ‘git status’ can be slow if your working directory has more than 10000 files - this is particularly bad on Windows (where NTFS really slows things down). David Turner of Twitter has created a patch for Git using Facebook’s Watchman file-watching service that can help by tracking the files in your working directory, making ‘git status’ faster - the work to incorporate this patch into Git is ongoing.

-

Deep commit histories - make ‘git blame’ really slow… the git blame algorithm is not simple, and very CPU intensive at the best of times.

-

BIG files - Git Large File Storage has rapidly become the dominant solution to problem of storing large files in Git since it’s launch last year. Git LFS works pretty well for commercial teams, though open-source projects interested in public participation probably won’t find it a silver-bullet for their needs - someone needs to pay for all that bandwidth! GitHub’s LFS support currently requires every individual participant to purchase a bandwidth allowance.

Switching Git to use a cryptographically-secure hashing function has been a topic at Git-togethers for at least half a decade - Git uses SHA-1 for it’s hashing function, which works brilliantly for distribution of object ids, and more or less adequately as a checksum for Git history. SHA-1 is a ‘strong’ hash, but has been considered compromised as a cryptographically secure hash for a long time.

As far as Git goes, it’s possible to debate whether Git needs a hash that is just ‘strong’, or actually cryptographically secure. The original line taken by Linus Torvalds was that the hash is a convenient guard against data corruption (“what you put in is what you get out”), but that in any case, once you’ve got history, it can’t be changed because every time objects are transferred into your Git repo (with a git fetch or pull) any incoming objects that match a hash you already have will be fully checked bit-by-bit against your existing copy of the object. So no matter how weak the hash is, you can’t change history within an existing copy of a repo. This is a great property, but it’s less useful if you’ve never fetched a copy of the repo before! The weakness of the SHA-1 hash is also unhelpful when you want to cryptographically sign a commit- if you want to certify the commit with all it’s history is trusted and you can’t rely on the integrity of the hash, the signature isn’t secure unless it’s been generated by extracting and processing all commits and files of that history.

Given that, updating Git to use a stronger hash function sounds attractive, but like the attempts to sunset SHA-1 SSL certificates, it won’t happen soon. Peff outlined 8 or 9 steps that would have to be undertaken to get Git updated - starting off with the relatively easy step of unifying the code within Git itself that handles object hashes. A new format for extended Git ids would need to be agreed - would everyone just adopt SHA-256, or would the hash name (eg. “sha-256”) become a prefix of the new object id format (as used in Git LFS)? Could new ids sit alongside old? There are parts of the Git data model where object ids are represented as strings (ie within commit object headers, where you could easily add new optional ‘extended-id’ headers, and others, eg ‘tree’ objects, where they’re taken as raw binary data of precisely 160 bits. This means that ‘baking-in’ the new hash, so that it becomes a first-class citizen of Git, would inevitably break backward compatibility.

Breaking backward compatibility is a problem given the massive installed user-base of Git clients & servers - old Git clients would report new repos as corrupt, and just blow up. As well as core-Git, significant Git libraries like libgit2 & JGit would need to be updated. Git hosting services would need to get behind the change - and they would obviously be reluctant to take on the resulting tech-support nightmare of a backward-incompatible change. See also this discussion from the Git mailing list archive.

submodules are a little notorious in the Git world - but Stefan Beller of Google has been working to make them better! A fairly lively discussion around the pain-points of submodules was had, providing plenty of input which was welcomed by Stefan.

submitGit and making it easier for people to contribute to Git was a topic following on directly from Git Merge 2015, where several Git developers expressed their dissatisfaction with Git’s current mailing-list based contribution process. The mailing list works well for the power-users, but it’s a method of contribution that is completely unfamiliar to the majority of today’s Git users - and these are people who could often have a good perspective on how to improve Git’s usability and documentation! There wasn’t anything like a consensus among Git core contributors to move away from the mailing list approach, but it was suggested that it might be possible to create a bridge that provided an alternative, more friendly way to sending patches to the list.

As a consequence, I developed submitGit, a one-way GitHub Pull Request -> Mailing-List tool and announced it to the mailing list in May 2015 where it was appreciatively received. I haven’t been able to spend as much time working submitGit as I’d like, and so it still misses features which limit it’s adoption, but it has still had a positive impact. There have been 44 contributors to Git over the past year, which makes the 23 users of submitGit a significant cohort. Pranit Bauva, a student working on Git as his Google Summer of Code project, has used submitGit for all his patch contributions, after discovering that his internet proxy blocked the email protocols necessary to use the standard Git mailing list process.

There was general agreement from those present at Git Merge 2016 that we could proceed with making submitGit a more ‘official’ tool for contribution - and I still need to complete the documentation updates to make that happen…

Day Two: Main conference

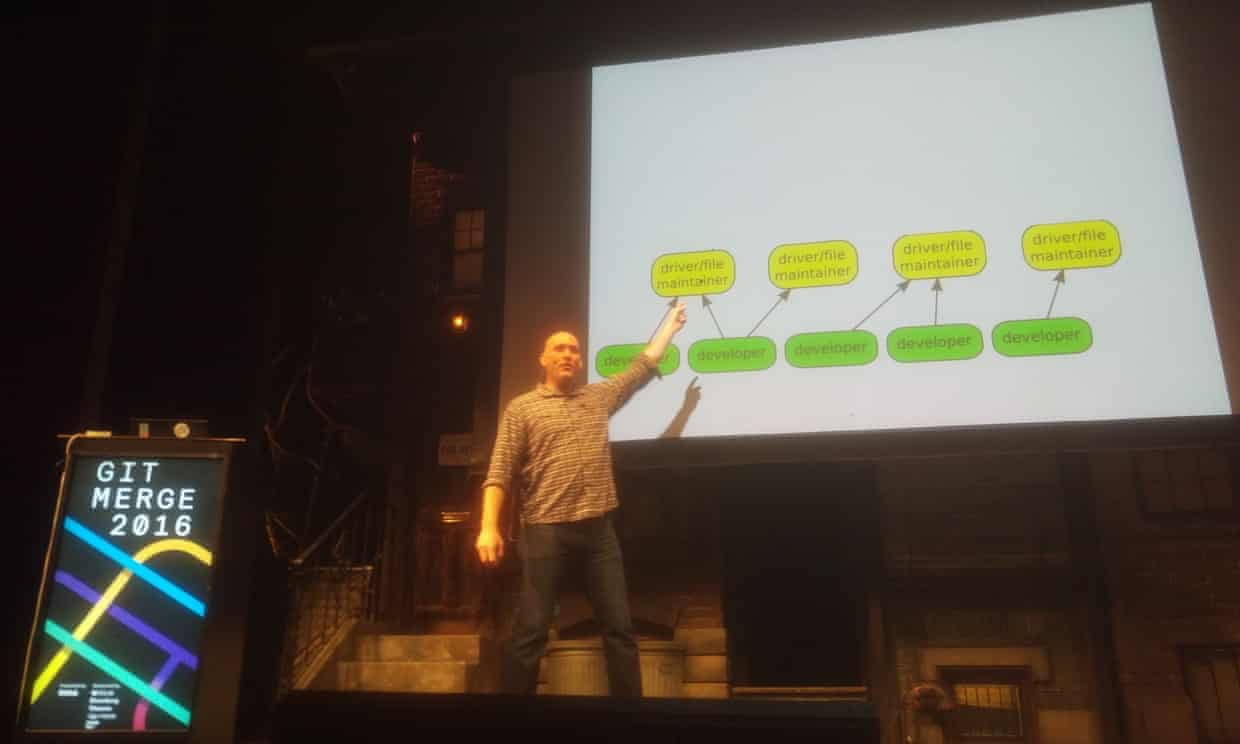

Greg Kroah-Hartman of the Linux Foundation opened the talks with a charismatic presentation on Linux Kernel Development and how it’s thriving with Git, with an ever accelerating number of commits every day. As someone who was trying to free people from mailing-list based contribution, it was very interesting for me to hear his enthusiastic arguments in favour of it - their global society of experienced devs is well-served by the format and pace expectations of email (slower than IRC, giving non-English speakers the opportunity to take their time, run google-translate, etc, when responding to messages).

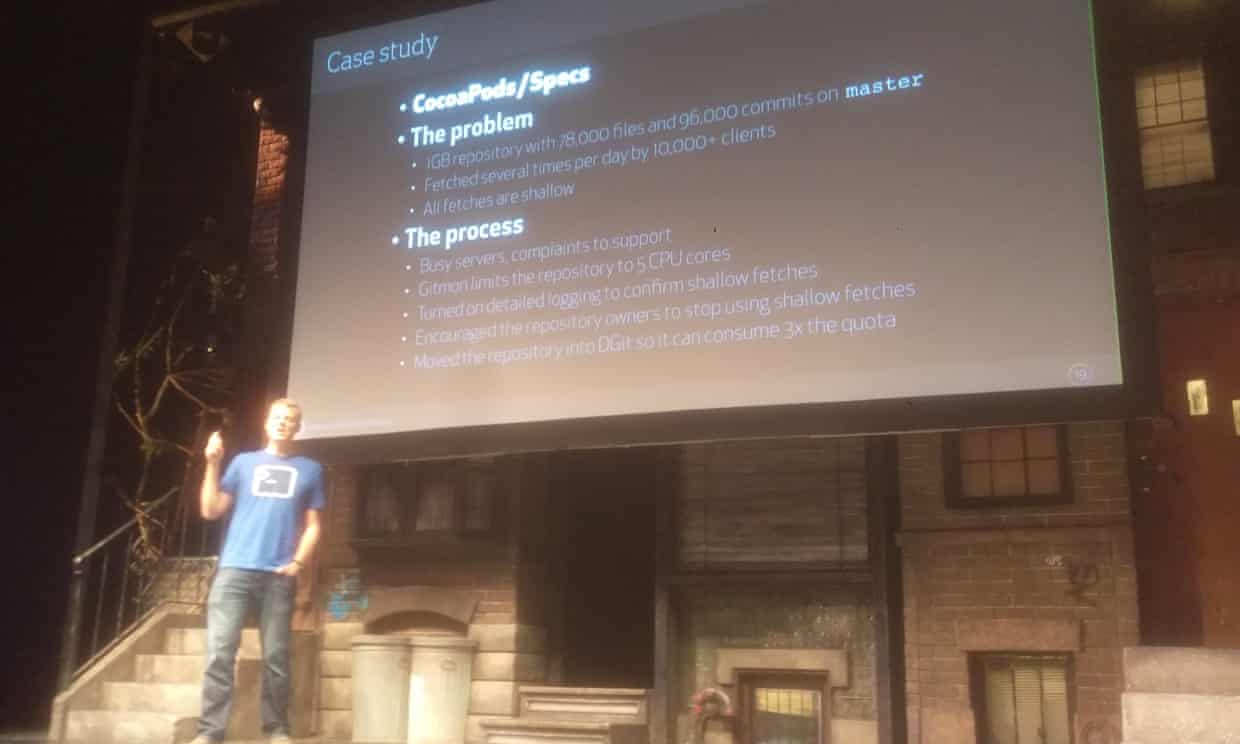

Patrick Reynolds gave a very interesting talk on ‘Scaling at GitHub’ - with a large portion of the problem being the need to ensure that unusual or extreme repositories and their users didn’t take down GitHub for everyone else - certain patterns of behaviour can burn substantial CPU time! Many devs, loving GitHub, have sought to use it to store more than just code and make it into a CDN for artifacts too - this doesn’t always work out…

It was also great to hear more from Tim Pettersen about how Atlassian, GitHub and Microsoft have been collaborating on the open-source Git LFS project - things have come a long way since that surprising coincidence in Paris last year.

Finally, it was nice to see my project the BFG being mentioned in so many talks - several times for the ‘convert-to-git-lfs’ support added in v1.12.5 :

Many thanks to the organisers for a great conference - and to the participants for helping to make Git even better, and more usable!